- Intel's Shared GPU Memory benefits LLMs

- Expanded VRAM pools allow smoother execution of AI workloads

- Some games slow down when the memory pool expands

Intel has added a new capability to its Core Ultra systems that echoes an earlier move from AMD.

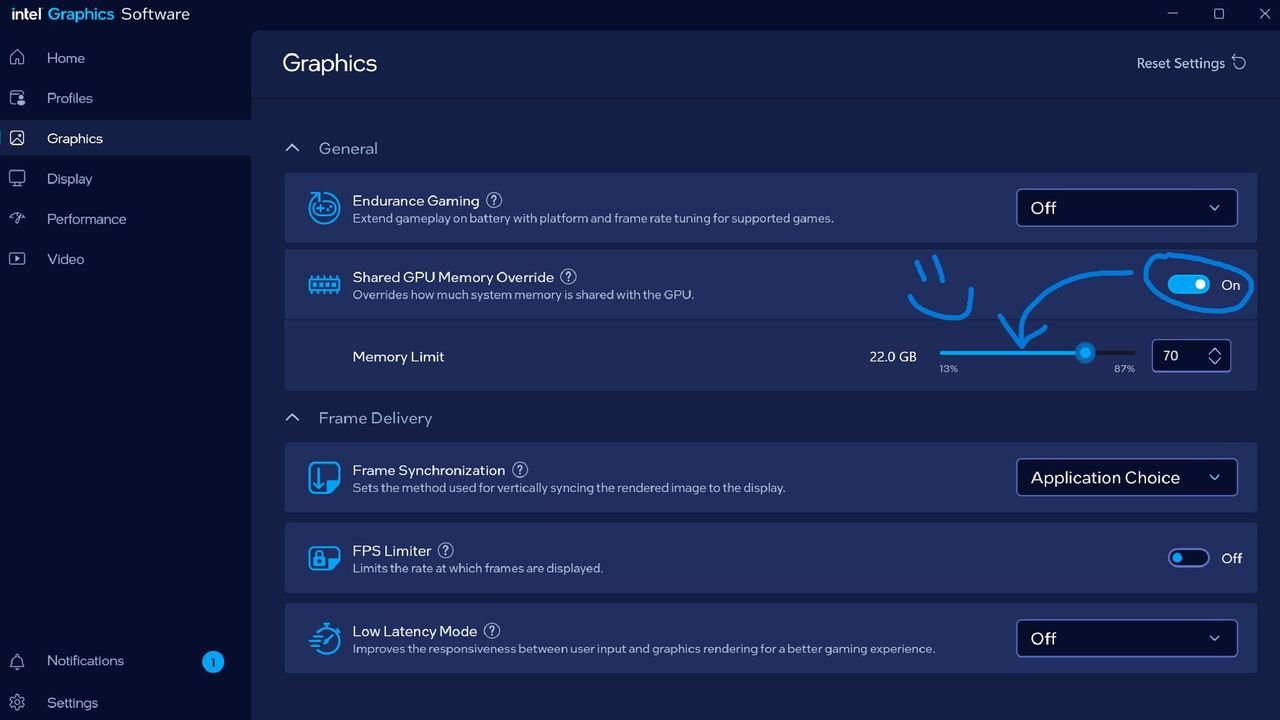

The feature, called "Shared GPU Memory Override," lets users allocate additional system RAM for use by the integrated graphics.

It is aimed at machines that rely on integrated graphics rather than discrete GPUs, a category that includes many compact laptops and mobile workstation models.

Memory allocation and gaming performance

The change is presented as a way to improve system flexibility, particularly for users interested in AI tools and other workloads that depend on available memory.

Extra shared memory is not automatically a benefit for every application. Testing has shown that some games load larger textures when more memory is available, which can actually cause performance to dip rather than improve.

AMD's earlier "Variable Graphics Memory" was framed largely as a gaming enhancement, especially when combined with AMD Fluid Motion Frames (AFMF).

That combination allowed more game assets to be stored directly in memory, which sometimes produced measurable gains.

The impact was not universal, however; results varied depending on the software in question.

Intel's adoption of a comparable system suggests it is keen to remain competitive, though skepticism remains over how broadly it will benefit everyday users.

While gamers may see mixed results, those working with local models could stand to gain more from Intel's approach.

Running large language models locally is becoming increasingly common, and these workloads are often limited by available memory.

By extending the pool of RAM available to integrated graphics, Intel is positioning its systems to handle larger models that would otherwise be constrained.

This may allow users to offload more of a model onto VRAM, reducing bottlenecks and improving stability when running AI tools.
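As a rough illustration of why memory is the constraint, the following sketch estimates how much memory a model's weights alone occupy: parameter count multiplied by bits per weight, divided by eight to get bytes. The function name `weight_memory_gb` and the example model sizes are hypothetical choices for illustration. The estimate deliberately ignores the KV cache, activations and runtime overhead, so real usage will be higher.

```python
def weight_memory_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate memory (in GB, 10**9 bytes) needed just to hold model weights.

    Rough estimate only: excludes KV cache, activations and runtime overhead.
    """
    # billions of parameters * bytes per parameter = gigabytes
    return params_billions * bits_per_weight / 8


# Hypothetical model sizes, at 16-bit and 4-bit (quantized) precision
for params in (7, 13, 70):
    for bits in (16, 4):
        print(f"{params}B model at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

On this arithmetic, a 7-billion-parameter model quantized to 4 bits needs roughly 3.5 GB for its weights, while the same model at 16 bits needs about 14 GB. A larger shared allocation can therefore decide whether a model fits in GPU-accessible memory at all.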

For researchers and developers without access to a discrete GPU, this could offer a modest but useful improvement.